Performance testing ensures your applications gracefully handle the anticipated—and even the unanticipated—demands of real-world use. Locust, an open-source load testing framework, and Kubernetes, a container orchestration system, offer a potent mix for streamlining and scaling your performance testing efforts.

Why Locust?

Python Power : Locust uses Python to define user behavior, making test creation intuitive and flexible. If you can code it, Locust can simulate it.

Distributed Testing : Simulate large numbers of concurrent users by running Locust in distributed mode, where a single master coordinates any number of workers.

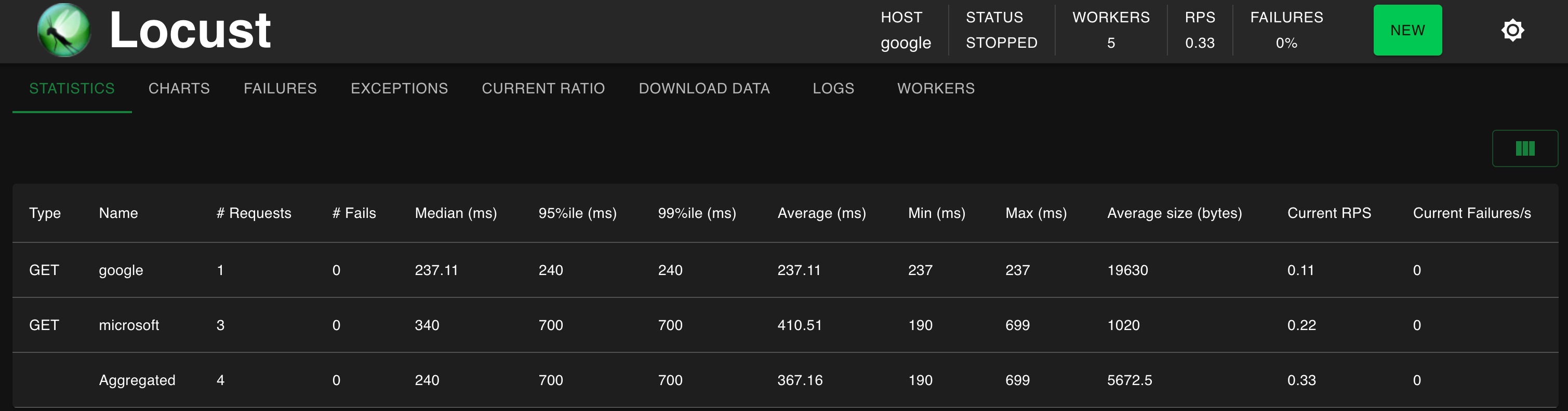

Elegant Web UI : Monitor your tests in real-time, gain deep insights into performance metrics, and identify bottlenecks thanks to Locust’s user-friendly interface.

Why Kubernetes?

Scalability : Seamlessly provision and manage the resources your Locust deployment needs. Spin up workers as required and scale down when testing is complete.

Resilience : Kubernetes protects your test environment. If a worker pod crashes it is restarted automatically, and if a node goes down its pods are rescheduled onto healthy nodes, minimizing test disruption.

Portability : Replicate your Locust test infrastructure across different environments (testing, staging, production) with ease.

Step 1 : Create Kubernetes manifests

```yaml
---
# Locust test script, mounted into both the master and the worker pods
apiVersion: v1
kind: ConfigMap
metadata:
  name: locust-script-cm
data:
  locustfile.py: |
    from locust import HttpUser, between, task
    import time

    class Quickstart(HttpUser):
        # Each simulated user pauses 1 to 5 seconds between tasks
        wait_time = between(1, 5)

        @task
        def google(self):
            self.client.request_name = "google"
            self.client.get("https://google.com/")

        @task
        def microsoft(self):
            self.client.request_name = "microsoft"
            self.client.get("https://microsoft.com/")

        @task
        def facebook(self):
            self.client.request_name = "facebook"
            self.client.get("https://facebook.com/")
---
# Locust master: coordinates the workers and serves the web UI
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  labels:
    role: locust-master
    app: locust-master
  name: locust-master
spec:
  replicas: 1
  selector:
    matchLabels:
      role: locust-master
      app: locust-master
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        role: locust-master
        app: locust-master
    spec:
      containers:
      - image: locustio/locust
        imagePullPolicy: Always
        name: master
        args: ["--master"]
        volumeMounts:
        - mountPath: /home/locust
          name: locust-scripts
        ports:
        - containerPort: 5557
          name: bind
        - containerPort: 5558
          name: bind-1
        - containerPort: 8089
          name: web-ui
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      volumes:
      - name: locust-scripts
        configMap:
          name: locust-script-cm
---
# Locust workers: generate the load and report to the master
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  labels:
    role: locust-worker
    app: locust-worker
  name: locust-worker
spec:
  replicas: 1 # Scale it as per your need
  selector:
    matchLabels:
      role: locust-worker
      app: locust-worker
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        role: locust-worker
        app: locust-worker
    spec:
      containers:
      - image: locustio/locust
        imagePullPolicy: Always
        name: worker
        args: ["--worker", "--master-host=locust-master"]
        volumeMounts:
        - mountPath: /home/locust
          name: locust-scripts
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        resources:
          requests:
            memory: "1Gi"
            cpu: "1"
          limits:
            memory: "1Gi"
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      volumes:
      - name: locust-scripts
        configMap:
          name: locust-script-cm
---
# Internal service the workers use to reach the master
apiVersion: v1
kind: Service
metadata:
  labels:
    role: locust-master
  name: locust-master
spec:
  type: ClusterIP
  ports:
  - port: 5557
    name: master-bind-host
  - port: 5558
    name: master-bind-host-1
  selector:
    role: locust-master
    app: locust-master
---
# External service exposing the Locust web UI
apiVersion: v1
kind: Service
metadata:
  labels:
    role: locust-ui
  name: locust-ui
spec:
  type: LoadBalancer
  ports:
  - port: 8089
    targetPort: 8089
    name: web-ui
  selector:
    role: locust-master
    app: locust-master
```
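The manifests still have to be applied to a cluster before anything runs. Here is a minimal sketch of one way to do that, assuming the manifests are saved as `locust.yaml` (a hypothetical file name) and deployed into the `locust` namespace that the scale command in Step 2 uses. The helper names `deploy_commands` and `deploy` are made up for illustration. As written, the script only prints the kubectl commands; call `deploy()` to actually run them.

```python
import subprocess

NAMESPACE = "locust"      # matches the -n flag used in Step 2
MANIFEST = "locust.yaml"  # assumption: hypothetical file holding the manifests


def deploy_commands(manifest=MANIFEST, namespace=NAMESPACE):
    """Return the kubectl invocations, in order, as argument lists."""
    return [
        # Fails if the namespace already exists; drop this step in that case.
        ["kubectl", "create", "namespace", namespace],
        # Creates the ConfigMap, both Deployments and both Services.
        ["kubectl", "apply", "-f", manifest, "-n", namespace],
        # Shows whether the master and worker pods are running.
        ["kubectl", "get", "pods", "-n", namespace],
        # Shows the external address of the web UI (port 8089).
        ["kubectl", "get", "service", "locust-ui", "-n", namespace],
    ]


def deploy(manifest=MANIFEST, namespace=NAMESPACE):
    """Run each command against the current kubectl context, stopping on failure."""
    for cmd in deploy_commands(manifest, namespace):
        subprocess.run(cmd, check=True)


# Dry run: print the commands instead of executing them.
for cmd in deploy_commands():
    print(" ".join(cmd))
```

Once the `locust-ui` service reports an external address, the web UI should be reachable on port 8089 of that address.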

Step 2 : Scale

To generate more requests per second, scale up the worker pods. The command below assumes the manifests were deployed into the `locust` namespace:

```shell
kubectl scale --replicas=5 deploy/locust-worker -n locust
```

Listing the pods afterwards shows five workers running alongside the master:

```
locust-master-74c9f6db7c-klk4l 1/1 Running 0 18m
locust-worker-6674d66d5-kgxlp 1/1 Running 0 6s
locust-worker-6674d66d5-m8bdf 1/1 Running 0 6s
locust-worker-6674d66d5-r9v7p 1/1 Running 0 6s
locust-worker-6674d66d5-z2w4x 1/1 Running 0 6s
locust-worker-6674d66d5-zfswz 1/1 Running 0 19m
```
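How many replicas to ask for depends on the load you are aiming for. A rough back-of-the-envelope sketch: each simulated user sends a request, waits for the response, then sleeps for the wait time, so it produces about one request every `avg_wait_s + avg_response_s` seconds. The 3-second average wait comes from `between(1, 5)` in the locustfile. The response time and the users-per-worker capacity are placeholder assumptions you would replace with your own measurements, and both function names are hypothetical.

```python
import math


def users_for_target_rps(target_rps, avg_wait_s=3.0, avg_response_s=1.0):
    """Estimate the number of simulated users needed to reach target_rps.

    avg_wait_s=3.0 is the mean of between(1, 5); avg_response_s is an
    assumed placeholder, not a measured value.
    """
    seconds_per_request = avg_wait_s + avg_response_s
    return math.ceil(target_rps * seconds_per_request)


def workers_for_users(users, users_per_worker):
    """Estimate worker replicas, given an assumed per-worker user capacity."""
    return math.ceil(users / users_per_worker)


# Example: aim for 100 requests per second, assuming 100 users per worker.
users = users_for_target_rps(100)
replicas = workers_for_users(users, users_per_worker=100)
print(f"{users} users, {replicas} workers")
print(f"kubectl scale --replicas={replicas} deploy/locust-worker -n locust")
```

Treat the result as a starting point only: a worker's real capacity depends on the CPU it is given and on how heavy each task is, so watch worker CPU usage during a trial run and adjust.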